One of the many challenges when building an application is ensuring that it’s secure. Whether you’re storing hashed passwords, sanitizing user inputs, or even just constantly updating package dependencies to the latest and greatest, the effort to keep an application secure is never-ending. And even though containerization has made it easier to ship better software faster, there is still plenty to consider when securing your infrastructure as well.

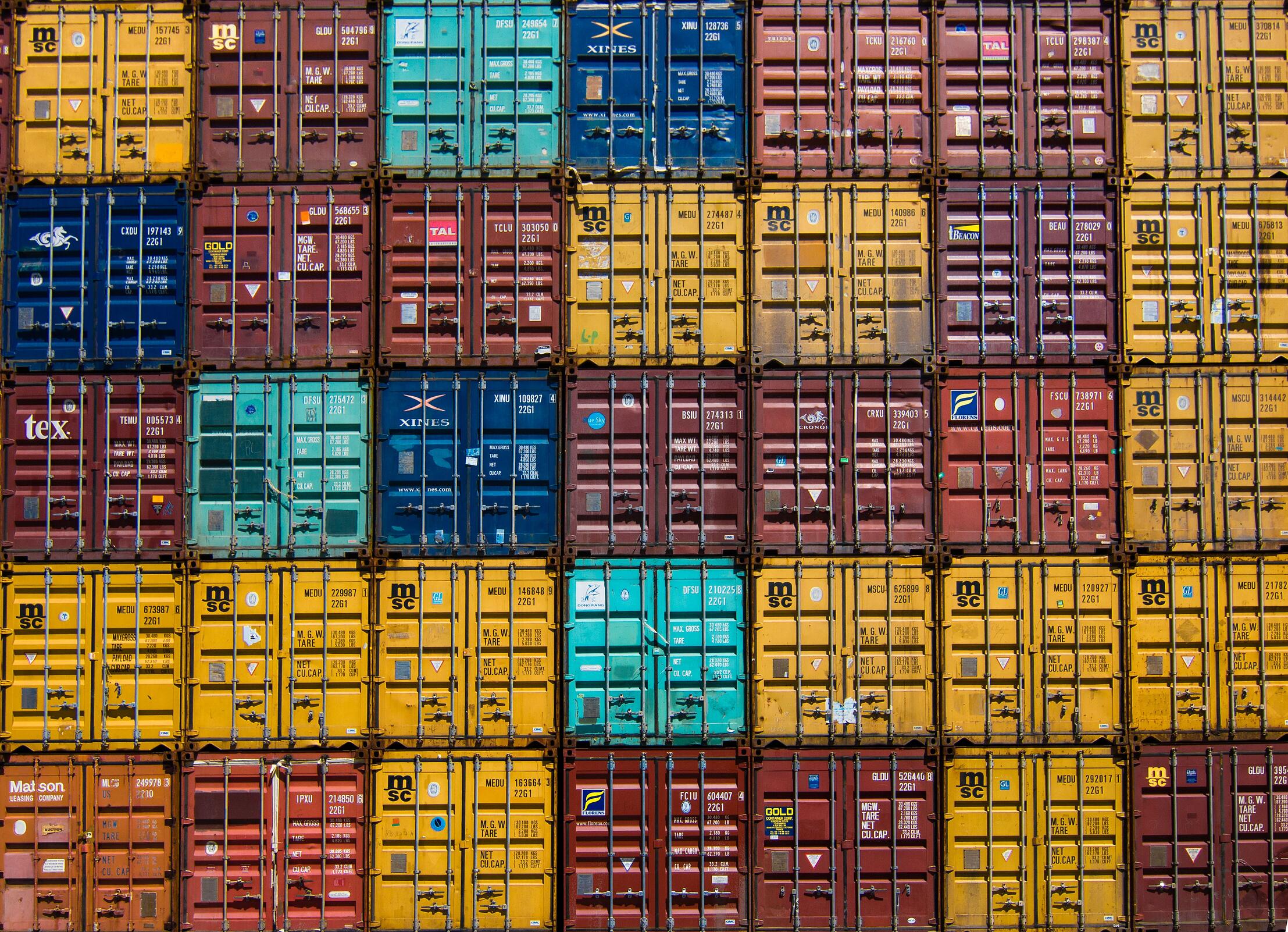

Photo by Guillaume Bolduc on Unsplash

If your application uses Docker as its containerization platform, there are several baseline tactics you can take to ensure that you’re optimizing for safety. In this post, I’ll go over some best practices when it comes to protecting your containers from malicious actors. Although I’m going to focus specifically on Docker and Kubernetes — they are the most popular options, after all — these guidelines are broadly applicable to any containerization solution!

Treat it like a real machine

Many assume that because processes within a container are isolated, they are inherently secure. They aren’t. Multiple applications can run on a single host, so it’s important to follow the principle of least privilege and grant each user only the access to resources that it actually needs.

Just as in a “traditional” desktop environment, that means:

- Making sure that directories and files are not world-writable

- Running containers with a non-root user

- Using features like namespaces or cgroups to classify who has access to what
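To make the first two points concrete, here’s a minimal Python sketch of an audit you could run inside a container. The function names `find_world_writable` and `running_as_root` are my own, not part of any Docker tooling, and the check assumes a POSIX filesystem.

```python
import os
import stat


def find_world_writable(root):
    """Return sorted paths under `root` that grant write access to 'other'."""
    flagged = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            mode = os.lstat(path).st_mode
            # Symlinks report permissive modes of their own, so skip them.
            if stat.S_ISLNK(mode):
                continue
            if mode & stat.S_IWOTH:
                flagged.append(path)
    return sorted(flagged)


def running_as_root():
    """Return True if the current process has effective UID 0 (POSIX only)."""
    return os.geteuid() == 0
```

You might call `find_world_writable("/app")` as a build step and fail the build if the list is non-empty or if `running_as_root()` is true. The `/app` path is only an example.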

Only use images from trusted sources

Open source is great, isn’t it? If you need to bring an OS, language, or application image into your Docker container, chances are that someone out on the internet has already made one for you. However, an image pulled from a public repository may only look like what you need. You’re placing a lot of trust in the author, and even if you do go through the image line by line, you can’t know for certain whether corrupted files are included.

There are plenty of reputable sources to fetch from. Don’t run the risk of grabbing something that isn’t hosted in a validated package registry. Docker Hub, for example, automatically scans images, and even offers certification intended to show that an image is legitimate.

But as recent history would suggest, implicitly trusting Docker Hub isn’t enough. For production environments, you will want to have your Docker client enforce content trust. This instructs your client to only use images that have been cryptographically signed by the image authors. Docker’s documentation on content trust explains how it works.
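The Docker client turns content trust on when the `DOCKER_CONTENT_TRUST` environment variable is set to `1`. Here’s a small Python sketch of a deploy script that makes sure every `docker pull` it launches runs with that variable set. The helper names are hypothetical, and the image tag in the usage note is just an example.

```python
import os


def content_trust_env(base_env=None):
    """Return a copy of the environment with Docker Content Trust enabled."""
    env = dict(os.environ if base_env is None else base_env)
    # With this set, the Docker client refuses unsigned image tags.
    env["DOCKER_CONTENT_TRUST"] = "1"
    return env


def pull_command(image):
    """Build the argument list for pulling `image` with the docker CLI."""
    return ["docker", "pull", image]
```

In practice you would pass both to `subprocess.run(pull_command("alpine:3.19"), env=content_trust_env(), check=True)`, so that a pull of an unsigned tag fails loudly instead of succeeding quietly.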

Use Docker Bench for Security

It’s inevitable that the likelihood of security issues increases as time goes on. Rather than attempting to constantly stay on top of these problems, why not have a script do that for you?

Docker Bench for Security is an official tool from the Docker crew. It’s a small bash script that checks whether your Docker containers are deployed using the recommended best practices. You can easily incorporate this into your existing CI workflow, either by bringing it into your docker-compose.yml file, or by cloning the repo and running the provided shell script.
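If you run the script in CI, you’ll probably want the build to fail when it reports warnings. Here’s a rough Python sketch that summarizes a saved report. It assumes each result line starts with a bracketed level such as `[PASS]`, `[WARN]`, `[INFO]`, or `[NOTE]`, possibly wrapped in ANSI color codes, so check that against the output of the version you actually run. The `summarize` function is my own invention.

```python
import re
from collections import Counter

# Terminal color sequences that may wrap the level tag.
ANSI = re.compile(r"\x1b\[[0-9;]*m")
# Assumed line shape: "[LEVEL] message".
RESULT = re.compile(r"^\[(PASS|WARN|INFO|NOTE)\]\s+(.*)$")


def summarize(report_text):
    """Return (counts per level, list of WARN messages) for a report."""
    counts = Counter()
    warnings = []
    for raw in report_text.splitlines():
        line = ANSI.sub("", raw).strip()
        match = RESULT.match(line)
        if not match:
            continue  # banners, blank lines, anything unrecognized
        level, message = match.groups()
        counts[level] += 1
        if level == "WARN":
            warnings.append(message)
    return counts, warnings
```

A CI step could read the report from a file, call `summarize`, print the warnings, and exit non-zero if there are any.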

Limit direct access to Kubernetes nodes

Kubernetes is a popular platform for orchestrating Docker containers across a fleet of hosts. Just as with any part of your network, access to the servers that run Kubernetes should be limited to a very small subset of technical administrators. You should set up IAM policies that enforce this.

Preventing SSH access to the nodes entirely also mitigates the risk of unauthorized access to the Kubernetes host machine. If your developers need to run commands inside a container on a node, they should do it using kubectl exec. This grants them direct access to the container’s environment without the ability to access the host itself.
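As an illustration, here’s a small Python helper that builds a `kubectl exec` invocation for internal tooling, so developers go through the Kubernetes API rather than SSH. The function name and the pod and namespace values in the usage note are hypothetical.

```python
def kubectl_exec_command(pod, command, namespace="default", container=None):
    """Build the argument list for running `command` inside a pod."""
    if not command:
        raise ValueError("command must contain at least one argument")
    args = ["kubectl", "exec", pod, "--namespace", namespace]
    if container:
        # Only needed when the pod runs more than one container.
        args += ["--container", container]
    # Everything after "--" is passed to the container, not to kubectl.
    return args + ["--"] + list(command)
```

For example, `kubectl_exec_command("web-0", ["ls", "/tmp"], namespace="staging")` yields a list you can hand to `subprocess.run`. Whether a given developer is allowed to exec into that pod is still decided by the cluster’s access controls.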

Isolating nodes within Kubernetes

Since several nodes can run within a single cluster, and Kubernetes can manage several different clusters, it’s essential to limit the scope of permissions between the clusters. That way, if one container or cluster is compromised, others in the fleet won’t be affected.

Kubernetes offers namespaces to partition shared resources into groups. Resources from one namespace can be hidden from other namespaces. By default, every resource is grouped into a namespace called “default,” so it’s important to take a look at your architecture and readjust the resource allocations as necessary. Kubernetes’ authorization plugins can help you create policies that divide resources into namespaces that are shared between different users. That way, every group can have an allocation explicitly defined, which provides assurance that no compromised machine will chew up your cloud service bill or affect your users.
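One way to give each group an explicit allocation is to pair a namespace with a ResourceQuota. That pairing is my assumption about what “allocation” could look like in practice. Here’s a minimal Python sketch that generates both manifests as JSON, which kubectl accepts alongside YAML. The helper names, the `-quota` naming scheme, and the limits in the usage note are made up for illustration.

```python
import json


def namespace_manifest(name):
    """Manifest for a namespace that groups one team's resources."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


def resource_quota_manifest(namespace, cpu, memory, pods):
    """Manifest capping total CPU, memory, and pod count in a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "limits.cpu": cpu,
                "limits.memory": memory,
                "pods": str(pods),
            }
        },
    }


def as_list(*items):
    """Bundle several manifests into one JSON document of kind List."""
    bundle = {"apiVersion": "v1", "kind": "List", "items": list(items)}
    return json.dumps(bundle, indent=2)
```

Writing `as_list(namespace_manifest("team-a"), resource_quota_manifest("team-a", "4", "8Gi", 10))` to a file and applying it with `kubectl apply -f` would create the namespace and cap what can run inside it.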

Protecting the network

Even after verifying how your Docker container runs and locking down access to Kubernetes, you’ll still want to create some kind of segmentation within your overall network. The goal is to limit cross-cluster communication, further reducing the impact of any exploited vulnerability.

Kubernetes provides documentation on defining automatic firewall rules between the dynamic IPs of containers. Beyond that, setting up Ingress configurations also lets you explicitly define which services can be accessed over HTTP.
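In Kubernetes, rules like these are usually expressed as NetworkPolicy resources. Here’s a hedged Python sketch that generates two of them: a default-deny policy for a namespace, and a policy that lets one labeled set of pods reach another on a single TCP port. The function names and all labels are hypothetical, and the policies only take effect if your cluster’s network plugin enforces NetworkPolicy.

```python
import json


def default_deny_ingress(namespace):
    """Policy that selects every pod in the namespace and allows no ingress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector matches all pods in the namespace.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress_from(name, namespace, target_labels, source_labels, port):
    """Policy letting pods with source_labels reach target pods on one port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": source_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


def to_json(manifest):
    """Serialize a manifest so it can be saved and applied with kubectl."""
    return json.dumps(manifest, indent=2)
```

Starting from default-deny and then adding narrow allow rules means a compromised pod can only talk to the few services you’ve explicitly opened up to it.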

Getting more help

I’ve only just scratched the surface of how to secure the Docker containers that run your applications. Every operational layer has its own considerations. There are plenty of additional resources online with even more best practices: in terms of automation, Snyk has some guidelines on integrating best practices within your CI pipeline, and this nifty cheat sheet also breaks down how to defend against the different types of potential exploits.

The bottom line is that while applications inside containers are isolated, they are not invincible. Docker enables developers to make changes quickly, but this flexibility also brings with it additional security considerations. Stay safe!
