Ben Abrams is a Sensu Community maintainer who helps tame over 200 repositories for plugins, documentation, tooling, and Chef. The following post is based on a talk Ben gave at Sensu Summit, which was not only well received (he was asked by several people to share his experiences in more detail than time allowed) but was also full of Sensu best practices. In part 1 of this series, he discusses the real costs of alert fatigue, plus a few steps you can take to mitigate.

The problem: the real costs of alert fatigue

If you’re not familiar with alert fatigue, here’s the short version:

Alert fatigue occurs when one is exposed to a large number of frequent alarms (alerts) and consequently becomes desensitized to them.

This problem is not specific to technology fields: most jobs that require on-call, such as doctors, experience it in slightly different manners, but the problem is the same. In our industry, the following are common costs of engineers or operators experiencing alert fatigue:

- Issues (and causes) are lost. We are not computers: our buffer is not as extensive as RabbitMQ.

- Costly extended outages, caused by either silencing alerting or the inability to find the right information to properly triage amongst the storm of data/alerts.

- Burnout/lack of retention: overloaded engineers who are always on-call and being bombarded tend to leave within a few years.

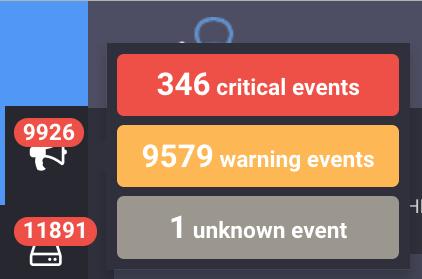

The above screenshot was taken from a real production system (I did not hack the JavaScript in Uchiwa to inflate) and demonstrates how the multitude of alerts can make it impossible to prioritize fixing issues.

When alert fatigue bleeds into your personal life

When alert fatigue bleeds into your personal life

The solution: rethinking alerting + using the right tools

Addressing (and eliminating) alert fatigue isn’t just about having the right tooling in place (but I’ll get to that later). By rethinking how you approach monitoring and alerting, you can make huge strides:

- Not actionable == not my problem: you can still monitor events, just don’t alert on something without there being an impact and a specific task that can be made to resolve the issue.

- Hold non urgent (as opposed to critical) alerts until morning: even if an alert is critical, it may not be urgent, as it may not affect service availability or performance (depending on the failure, HA setups, etc). Waking up engineers on a regular basis leads to burnout and silencing/ignoring alerts. In some rare cases, it can motivate people to fixing root causes, but if it’s not urgent then it probably isn’t worth the strain on your engineers.

- Consolidate alerts: aggregate results of your monitoring events (more on how to do this later).

- Ensure that alerts come with contextual awareness: e.g., tripping a CPU threshold to show the top 10 processes sorted by CPU utilization. If you see it’s a database backup process running, consider adjusting thresholds or creating a mechanism to ignore those events during the expected time frame.

- Service ownership: get the alerts to the right team. Have teams take ownership of their products, which includes taking alerts for issues that they’re best suited to resolve.

- Effective on-call scheduling: make sure you have enough people to allow a rotation and allow people to recover from being on-call. It is stressful and can help lead to or facilitate a decline in one’s health — I speak from personal experience.

- Wake me up when it’s over: during an alert storm (major outage), silence the alerts to allow teams to focus on recovery rather than acknowledging alerts every few minutes.

- Review monitoring at the end of your on-call handoffs: often called a “Turnover report,” this details all the urgent alerts that the on-call engineer gets and responds to. The report typically includes a link to the alert, responding engineers, resolution, whether the alert was actionable, and monitoring recommendations or service changes.

These are some great first steps for rethinking how you approach alerting. Starting in part 2 of this series, we’ll cover how you can put some of these suggestions into action, plus tips for fine-tuning Sensu.

Read more in this series: